1. The McGurk Effect (1976)

2. How modalities can affect/intersect between each other? (4 Potential Scenarios)

- Multimodal redundancy: Each modality data is redundant to each other

- Multimodal enhancement: The intersection between modalities helps to reach the task more effectively

- Multimodal non-redundancy: Each modality complements each other

- Multimodal dominance: One modality dominates the other modality

3. Core Challenges

- Representation

- Learning how to represent and summarize in such a way that exploits the complementarity and redundancy of each modality is complex, specially when we are dealing with heterogeneous data.

- For example, audio waveforms need to be represented as a sequence of symbols (digital audio signals).

- Sound wave: They are similar to water waves. It follows a cycle where the wave starts, continues and ends with the start of the next wave. Sound waves behave similarly but in the air and we cannot see them.

- Frequency: Represents the number of wave cycles that occur in one second. We can only here frequencies from 20 cycles to 20K cycles. Everything below or over, we cannot here it.

- Pitch: Most sounds transmit with a complex array of frequencies. The pitch is the relative highness or lowness of a frequency.

- For example, audio waveforms need to be represented as a sequence of symbols (digital audio signals).

- Three ways

- join or fusion

- representation A + B

- coordination

- reprensentation A, representation B => coordination

- ex. training: multi-modalities / inference: single modality (e.g. translation)

- During the Training, we use text representation to enhance the audio representation.

- During the Inference, we have only the audio usable.

- fission

- multiple of representations of the combination

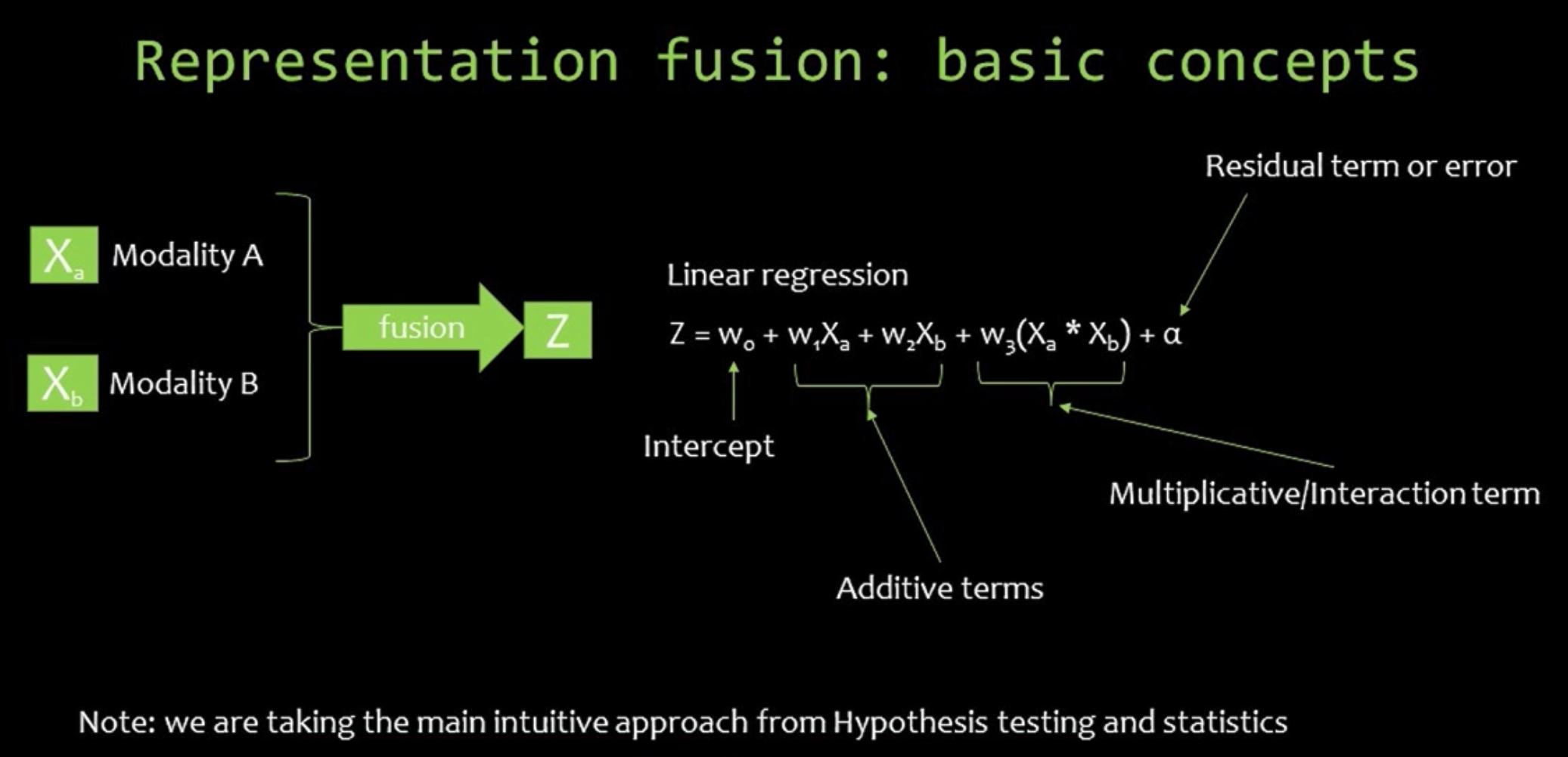

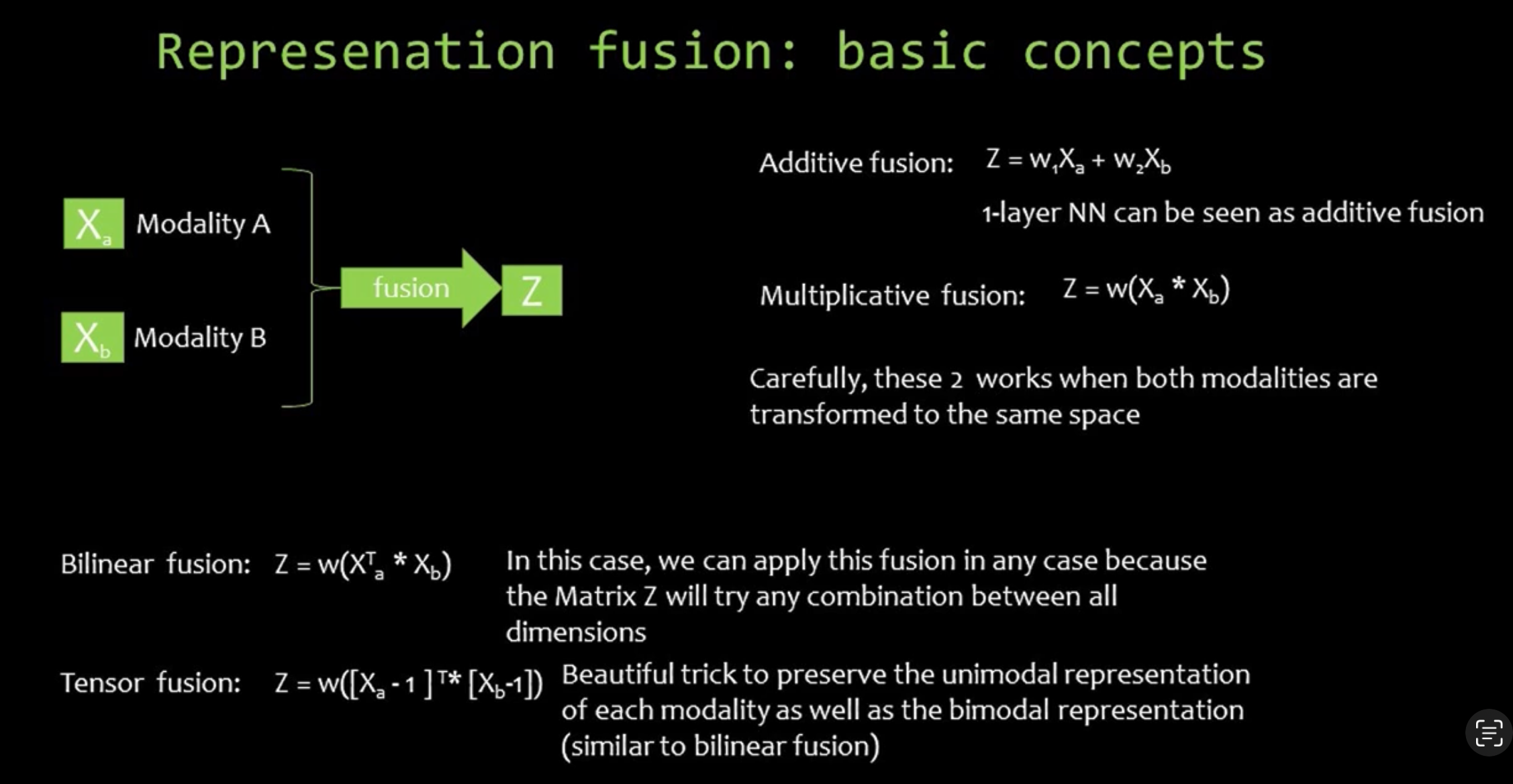

- join or fusion

- Bilinear fusion: It losses the univariate of the feature or the representation of the uni-modal as the result.

- How can we preserve the representations of the uni-model?

- Tensor fusions comes in the way.

- Tensor fusion's first column and last row preserves the representations of the uni-modal. And the rest of the features capture all the possibility of the combinations between two modalities.

- Of course, there are a tons of fusions:

- Low-rank fusion, Gated fusion, High-order polynomial fusion, Trimodal fusion, Modality-shifting fusion, Dynamic fusion, Nonlinear fusion, etc.

- For more detailed implementation of different types of fusion, please refer to the link at here.

- Alignment

- Identify the direct relations between elements from two or more different modalities.

- Most modalities have internal structures with multiple elements. These structures can have different sources:

- Temporal structures (ex. video & images)

- Spatial structures

- Hierarchical structures

- Explicit Alignment: Find correspondences between elements of different modalities.

- ex. Align each frame of a video with its correspondent text sentences.

- Implicit Alignment: Leveraging internally latent alignment of modalities to better solve a different problem

- ex. Visual question answering applications

- Attention mechanism comes in handy in the scenario.

- Translation

- It refers to the process of translating data from one modality to another.

- ex. sentence to image models

- Fusion

- It refers to the process of joining data/information from two or more modalities to perform prediction tasks.

- Two general approaches: model agnostic and model based approaches.

- Model Agnostic can be subdivided between early and late fusion. Basically they use uni-modal models and they are easy to implement (ex. concatenation).

- Early fusion/concatenation: Basically we concatenate the encoding of each representation of each modality.

- Late fusion: We perform the prediction/inference task for each modality and we apply majority vote between each prediction to determine the final prediction.

- Model based approaches can be subdivided into kernel-based methods, graphical models and neural networks.

- Model Agnostic can be subdivided between early and late fusion. Basically they use uni-modal models and they are easy to implement (ex. concatenation).

- Co-learning

- It refers to the process of transfer knowledge between modalities, including their representations and predictive models. (similar to Knowledge Distillation)

- Two main trends based on the training process: Parallel and Non-Parallel co-learning.

* Lecture note from Fastcampus Lecture: "[The RED] Deep Multimodal Learning Introduction"

'개발일지 > AI' 카테고리의 다른 글

| [Paper Review] Open FinLLM Leaderboard: Towards Financial AI Readiness (0) | 2025.03.15 |

|---|---|

| [Quantization] 이론 이해 및 정리 (0) | 2024.10.01 |